Projects

A selection of projects...

Meta Quest Pro Eye Tracking Test Suite

Contributed to research toward a standardized test suite for the eye tracking technology in mixed reality headsets, focusing on scenarios with varying degrees of movement. Designed and executed an evaluation and calibration method for the Meta Quest Pro. Analyzed how movement, and a virtual reality versus an augmented reality environment, affect visual perception.

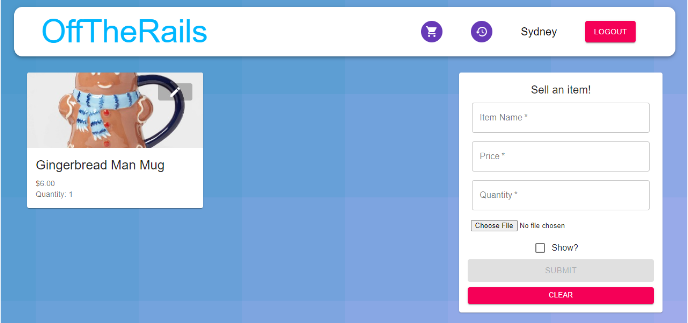

OffTheRails Online Store

Worked in a team of six to develop a Ruby on Rails online store web application deployed on AWS Elastic Beanstalk. Analyzed the application's scalability by identifying bottlenecks and applying various optimizations. Conducted cost analysis to find the optimal EB instance configuration through Tsung load testing.

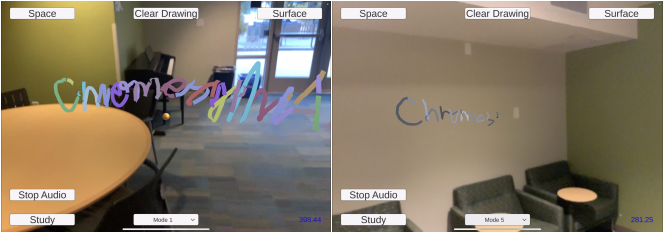

ChromesthesiAR

Worked in a team of three to develop a Unity AR drawing application for iOS that explores chromesthesia. The application allowed the user to draw either in 3D space or on a 2D surface. It analyzed sounds in the user's surroundings and modified the brush color accordingly. Conducted a user study to investigate associations between color and sound.

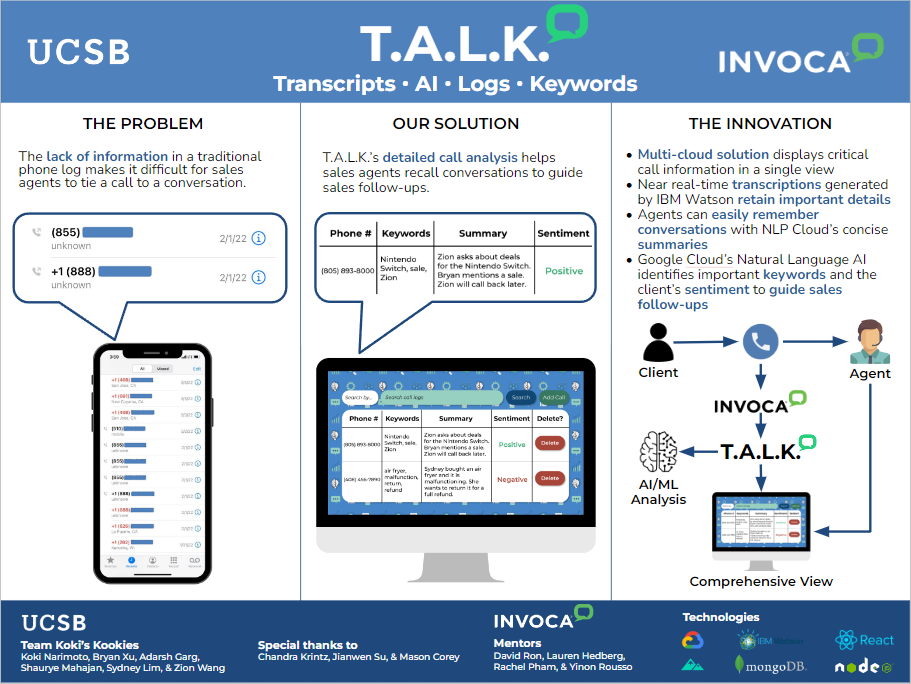

T.A.L.K.

Worked in a team of six to develop an Express web application, deployed on Heroku, for use by salespeople. Designed a multi-cloud solution that displays critical call information in a single view. Used near-real-time transcriptions generated by IBM Watson to retain important call details. Leveraged NLP Cloud to generate concise call summaries so that salespeople can easily recall call contents. Determined keywords and the customer's sentiment using Google Cloud's NLP API to guide sales follow-ups. Integrated Invoca's API to retrieve call transcripts and stored the data in a MongoDB database.

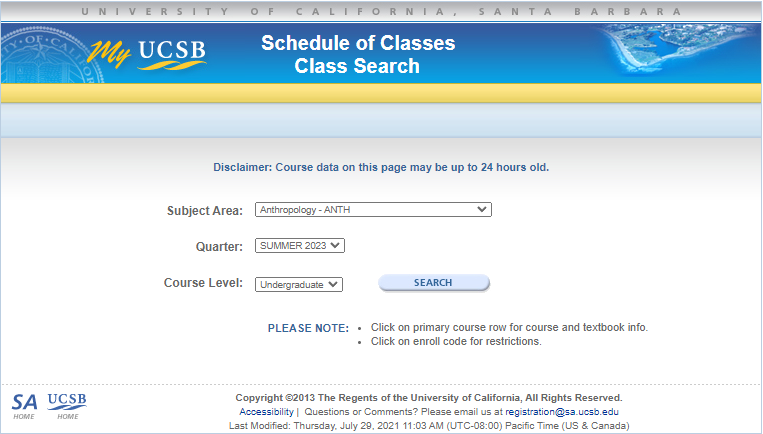

UCSB Courses Search

Worked with a team of ~20 people on this legacy project, a web application used to search for classes based on input criteria. Collaborated with a subteam of 5 people to focus on improving the search user interface.

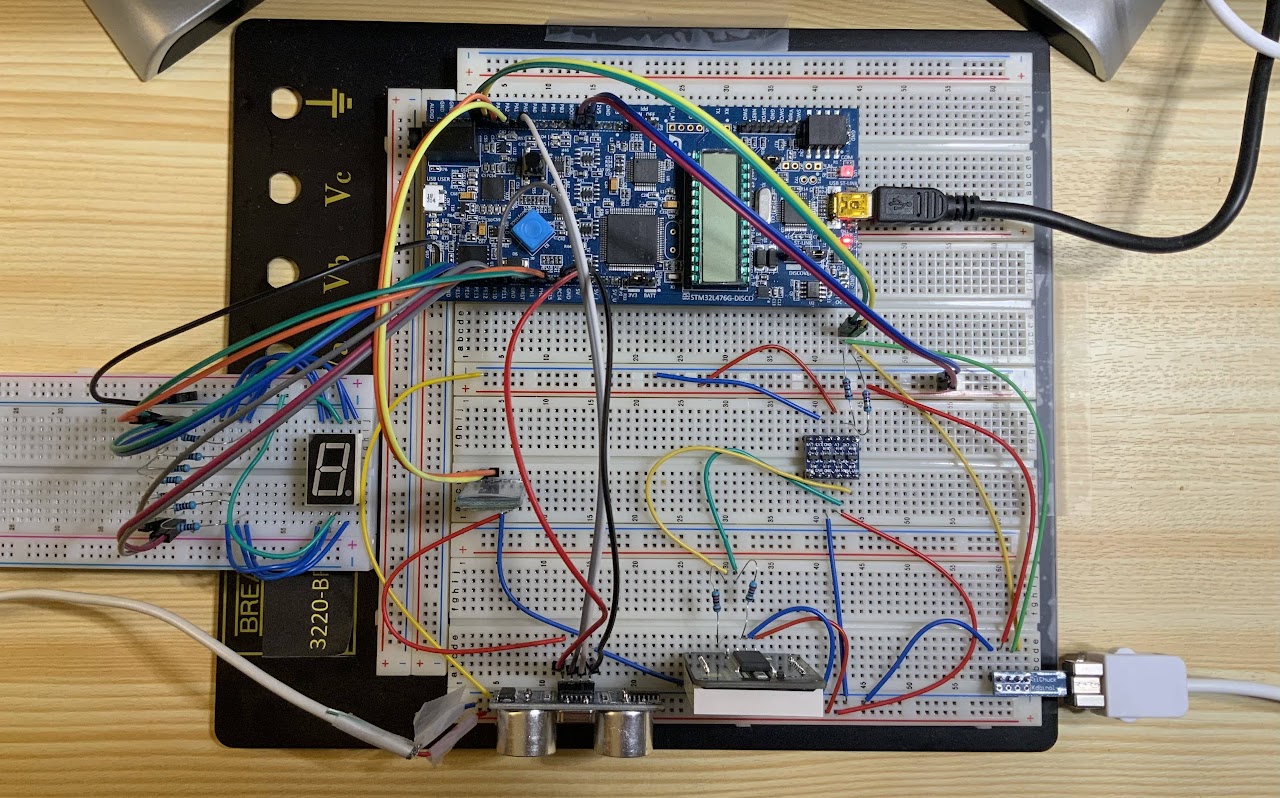

Vision Test

Designed a “vision test” that simulates a tumbling E chart with symbols gradually decreasing in size. Displayed the E's on an 8x8 LED matrix. Connected a Wii Nunchuk so that the user can input the direction the E is facing. Used a distance sensor to verify that the user is standing at an appropriate distance from the display. Displayed the user's vision score in a terminal. Connected both the 8x8 LED matrix and the Wii Nunchuk to an STM32 microcontroller over I2C, and connected the terminal to the STM32 microcontroller over SPI.
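
As a rough illustration of the tumbling E idea, the four orientations can be produced by rotating a single glyph. This is a sketch in Python rather than the project's STM32 firmware; the 5x5 glyph and the names `E_RIGHT`, `rotate_cw`, and `tumbling_e` are assumptions made for the example.

```python
# Hypothetical 5x5 glyph: an E whose open side faces right.
E_RIGHT = [
    "#####",
    "#....",
    "#####",
    "#....",
    "#####",
]


def rotate_cw(glyph):
    """Rotate a square glyph 90 degrees clockwise."""
    # Reversing the rows and then reading columns gives a clockwise turn.
    return ["".join(col) for col in zip(*glyph[::-1])]


def tumbling_e():
    """Return the E glyph for each facing direction."""
    glyphs = {}
    glyph = E_RIGHT
    # Each clockwise turn moves the open side: right, down, left, up.
    for direction in ("right", "down", "left", "up"):
        glyphs[direction] = glyph
        glyph = rotate_cw(glyph)
    return glyphs


if __name__ == "__main__":
    for direction, glyph in tumbling_e().items():
        print(direction)
        print("\n".join(glyph))
        print()
```

A test loop would pick one direction at random, draw the glyph, and compare it with the direction the user enters.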

COVID-19 Survival Naive Bayes Classifier

Designed a Naive Bayes classifier in Python that predicts whether a patient will survive COVID-19 given their pre-existing conditions. Preprocessed and cleansed training and validation data sets using NumPy and SciPy. Constructed a model to determine which data fields were of greater importance. Placed second on the class leaderboard for classification accuracy and runtime.
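
For readers unfamiliar with the technique, a minimal Bernoulli Naive Bayes sketch is shown here. It is not the project's code: it uses only the standard library, assumes binary features (1 if a condition is present), applies Laplace smoothing, and the function names and toy data are hypothetical.

```python
import math


def train(rows, labels, alpha=1.0):
    """Fit per-class log priors and smoothed P(feature = 1 | class)."""
    model = {}
    n_features = len(rows[0])
    for c in sorted(set(labels)):
        members = [r for r, label in zip(rows, labels) if label == c]
        log_prior = math.log(len(members) / len(rows))
        # Laplace smoothing keeps every probability strictly between 0 and 1.
        theta = [
            (sum(r[j] for r in members) + alpha) / (len(members) + 2 * alpha)
            for j in range(n_features)
        ]
        model[c] = (log_prior, theta)
    return model


def predict(model, row):
    """Return the class with the highest log posterior score."""
    def score(c):
        log_prior, theta = model[c]
        return log_prior + sum(
            math.log(t if x else 1.0 - t) for x, t in zip(row, theta)
        )
    return max(model, key=score)


if __name__ == "__main__":
    # Toy data (made up): two binary features, label 1 = survived.
    rows = [[1, 1], [1, 0], [1, 1], [0, 0], [0, 1], [0, 0]]
    labels = [0, 0, 0, 1, 1, 1]
    model = train(rows, labels)
    print(predict(model, [1, 1]), predict(model, [0, 0]))
```

Working in log space avoids numerical underflow when many per-feature probabilities are multiplied together.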